Whether you’re an app developer or a business offering services through APIs, you’ll inevitably encounter the concept of rate limiting. That is largely due to the sheer volume of data exchanged online today, which makes managing traffic flow a prime consideration.

This article explains what rate limiting is, how it works, the role proxy servers play in it, and why it’s an indispensable part of modern web architecture. We’ll also consider whether it’s something you need or simply something good to know.

Table of Contents

- What is Rate Limiting?

- Rate Limiting With Proxy Servers

- Rate Limiting Best Practices

- Who Uses Rate Limiting and Why?

- Final Thoughts: Do You Need Rate Limiting?

1. What is Rate Limiting?

Rate limiting is a fundamental concept in managing web traffic. It is especially relevant in the context of APIs and web services. At its core, rate limiting controls the number of requests a user or client can make to a server within a specific time frame.

This is crucial for maintaining a server’s performance and stability, ensuring fair resource distribution, and protecting against abuse such as distributed denial-of-service (DDoS) attacks.

a. How Rate Limiting Works

Rate limiting works by setting predefined limits on the number of requests that can be made by a user, IP address, or API key within a certain period. When the number of requests exceeds this limit, the server can respond in several ways:

- Rejecting the request

- Delaying it

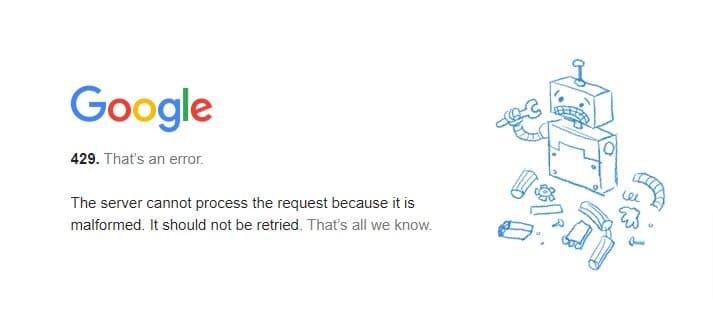

- Returning an error message (such as HTTP 429 “Too Many Requests.)

Implementing rate limiting involves tracking and comparing each client request against the set limit. If the limit is reached, the server triggers the appropriate response. The main goal is to prevent any single client from overwhelming the server.
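To make the track-and-compare step concrete, here is a minimal Python sketch using a fixed-window counter. It assumes each client is identified by a string ID and that the server answers with either 200 or 429. The class and function names are illustrative and do not come from any particular framework. The optional `now` argument is included only so the behavior can be reproduced deterministically.

```python
from __future__ import annotations

import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed window of `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # client_id -> (index of the current window, requests counted in it)
        self.counters: dict[str, tuple[int, int]] = {}

    def allow(self, client_id: str, now: float | None = None) -> bool:
        now = time.time() if now is None else now
        window_index = int(now // self.window)
        index, count = self.counters.get(client_id, (window_index, 0))
        if index != window_index:
            # A new window has started, so the count starts over.
            index, count = window_index, 0
        if count >= self.limit:
            return False  # limit reached for this window
        self.counters[client_id] = (index, count + 1)
        return True


def handle_request(limiter: FixedWindowLimiter, client_id: str, now: float | None = None) -> int:
    """Return the HTTP status code to send: 200 if allowed, 429 Too Many Requests if not."""
    return 200 if limiter.allow(client_id, now) else 429


limiter = FixedWindowLimiter(limit=3, window=60)
print([handle_request(limiter, "client-a", now=t) for t in (0, 1, 2, 3)])  # [200, 200, 200, 429]
print(handle_request(limiter, "client-a", now=61))  # 200: a new window has started
```

This sketch keeps its counters in memory for a single process. A real deployment would also need to evict stale entries, or keep the counters in a shared store, so that several servers see the same counts.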

b. Common Rate Limiting Methods

There are several methods to implement rate limiting, each with its own advantages and use cases:

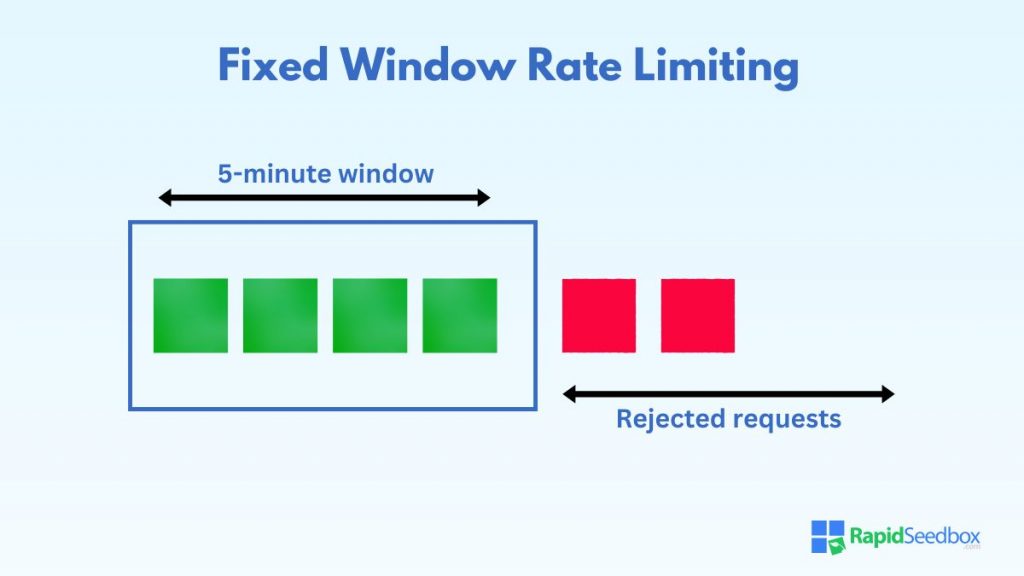

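- Fixed Window Limiting: The simplest form of rate limiting. The server counts each client’s requests in fixed intervals, such as one minute. Once the count reaches the limit, excess requests are rejected until the next interval begins. Because the counters reset at interval boundaries, a client can briefly send up to twice the limit by bunching requests around a boundary.

- Sliding Window Limiting: Calculates the limit over a rolling window of time (for example, the last 60 seconds) rather than fixed intervals. This avoids bursts at window boundaries and provides smoother control.

- Token Bucket Algorithm: Tokens are added to a bucket at a fixed rate, up to a maximum capacity. Each incoming request consumes a token, and requests are served as long as tokens remain. This allows short bursts up to the bucket’s capacity while capping the long-term average rate at the refill rate.

- Leaky Bucket Algorithm: Ensures a consistent request rate by “leaking” (processing) queued requests at a steady pace. Requests arriving faster than that rate are queued, and those that arrive when the queue is full are dropped.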
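The sliding window and token bucket methods can each be sketched in a few lines. The Python below is a simplified, single-process illustration built on our own assumptions: one limiter object per client, plus an injectable clock for testing. It is not a production library.

```python
from __future__ import annotations

import time
from collections import deque


class SlidingWindowLog:
    """Allow at most `limit` requests in any rolling `window`-second period."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.timestamps: deque[float] = deque()  # times of accepted requests, oldest first

    def allow(self, now: float | None = None) -> bool:
        now = time.monotonic() if now is None else now
        # Forget requests that have slid out of the rolling window.
        while self.timestamps and self.timestamps[0] <= now - self.window:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True


class TokenBucket:
    """Refill `rate` tokens per second up to `capacity`; each request spends one token."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full, so an initial burst is allowed
        self.last: float | None = None

    def allow(self, now: float | None = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Add the tokens earned since the previous call, without exceeding capacity.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


sw = SlidingWindowLog(limit=3, window=60)
print([sw.allow(now=59) for _ in range(3)], sw.allow(now=60))  # [True, True, True] False

tb = TokenBucket(rate=1, capacity=3)
print([tb.allow(now=0) for _ in range(4)])  # [True, True, True, False]
print(tb.allow(now=1.0))  # True: one token was refilled after one second
```

In the sliding-window example, a fixed one-minute window would have reset at second 60 and let a fourth request through. The rolling window rejects it instead.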

2. Rate Limiting With Proxy Servers

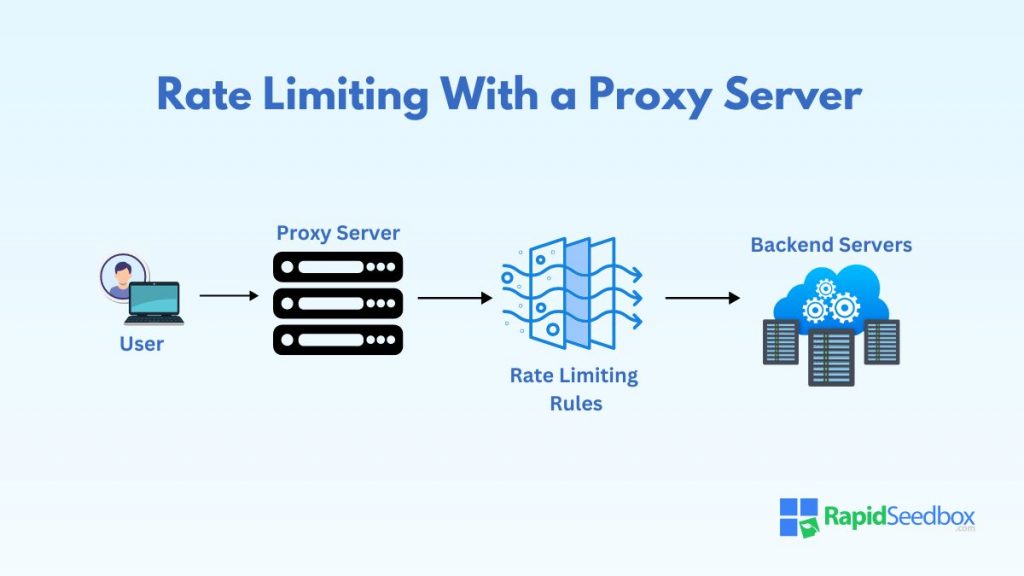

Proxy servers are crucial in managing web traffic. Because they act as intermediaries between clients and servers, they are key to effective traffic distribution, which also makes them an excellent place to implement rate limiting.

This position gives proxy-level rate limiting several advantages:

a. Traffic Control and Load Balancing

One of the primary functions of proxy servers is to manage and distribute traffic across multiple backend servers.

By implementing rate limiting at the proxy level, you can control the flow of requests before they reach the backend, ensuring that no single server is overwhelmed by too many requests. This is especially important when backend servers must handle large traffic volumes.

Rate limiting also complements load balancing. The load balancer spreads requests across servers, while per-client limits stop any one client’s burst from consuming a disproportionate share of capacity, so no single server becomes a bottleneck.
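One way a proxy can smooth traffic before it reaches the backend is the leaky bucket method. Incoming requests wait in a bounded queue and are forwarded at a fixed rate. The Python sketch below simulates that behavior. The class name, the explicit `now` timestamps, and the choice to simply drop requests when the queue is full are all assumptions made for illustration.

```python
from __future__ import annotations

from collections import deque


class LeakyBucketQueue:
    """Hold up to `capacity` requests and forward them to the backend at `leak_rate` per second."""

    def __init__(self, capacity: int, leak_rate: float) -> None:
        self.capacity = capacity
        self.leak_rate = leak_rate
        self.queue: deque[str] = deque()
        self.last_leak = 0.0
        self.credit = 0.0  # how many requests the fixed rate currently allows us to release

    def drain(self, now: float) -> list[str]:
        """Release the requests the fixed rate allows since the last call, oldest first."""
        self.credit += (now - self.last_leak) * self.leak_rate
        self.last_leak = now
        released: list[str] = []
        while self.queue and self.credit >= 1:
            released.append(self.queue.popleft())
            self.credit -= 1
        if not self.queue:
            self.credit = 0.0  # an idle bucket does not save up capacity for a later burst
        return released

    def offer(self, request_id: str, now: float) -> tuple[bool, list[str]]:
        """Try to queue a request; return (accepted, requests forwarded during this call)."""
        released = self.drain(now)
        if len(self.queue) >= self.capacity:
            return False, released  # bucket is full: drop the request (e.g. answer 429)
        self.queue.append(request_id)
        return True, released


bucket = LeakyBucketQueue(capacity=2, leak_rate=1)
print([bucket.offer(r, now=0) for r in ("a", "b", "c")])  # [(True, []), (True, []), (False, [])]
print(bucket.drain(now=1), bucket.drain(now=3))  # ['a'] ['b']
```

However bursty the incoming traffic is, the backend never receives more than `leak_rate` requests per second from this queue.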

b. Protecting Backend Servers

Rate limiting at the proxy server level protects backend servers from excessive traffic, particularly during DDoS attacks. Enforcing rate limits at the proxy allows you to filter out potentially malicious traffic before it reaches the backend servers.

Moreover, proxy servers can differentiate between legitimate traffic and suspicious patterns, applying stricter limits on the latter. This selective behavior helps protect critical resources while allowing genuine users to continue accessing services.

c. Managing API Requests

Proxy servers often act as API gateways, handling requests from multiple clients before forwarding them to the backend services. Rate limiting is essential in this scenario to ensure that API usage is fair and that no single client consumes excessive resources.

By enforcing quotas and throttling at the proxy level, API providers can make sure the limits defined in service agreements are respected. This is essential for public APIs, where many diverse clients must be managed efficiently.

Proxies can also implement advanced features like dynamic limits based on client behavior. This allows more granular control over API usage. You can ensure that high-priority clients receive the necessary resources while preventing abuse from lower-priority users.
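Tier-based quotas boil down to looking up each client’s limit before counting its requests. In this hypothetical sketch, API keys map to named tiers that each have a per-minute limit. The tier names, numbers, and keys are placeholders, not recommendations.

```python
from __future__ import annotations

# Hypothetical per-minute limits for each tier; real values would come from your service agreements.
TIER_LIMITS = {"free": 60, "pro": 600, "enterprise": 6000}

# Hypothetical mapping of API keys to tiers; in practice this would be looked up in a database.
API_KEY_TIERS = {"key-free-123": "free", "key-pro-456": "pro"}


class TieredQuota:
    """Per-API-key fixed-window quota whose size depends on the key's tier."""

    def __init__(self, window: float = 60.0) -> None:
        self.window = window
        self.usage: dict[str, tuple[int, int]] = {}  # api_key -> (window index, count)

    def limit_for(self, api_key: str) -> int:
        # Unknown keys fall back to the most restrictive tier.
        return TIER_LIMITS[API_KEY_TIERS.get(api_key, "free")]

    def allow(self, api_key: str, now: float) -> bool:
        window_index = int(now // self.window)
        index, count = self.usage.get(api_key, (window_index, 0))
        if index != window_index:
            index, count = window_index, 0  # new window, fresh quota
        if count >= self.limit_for(api_key):
            return False
        self.usage[api_key] = (index, count + 1)
        return True


quota = TieredQuota()
free = [quota.allow("key-free-123", now=0) for _ in range(61)]
print(free.count(True), free[-1])  # 60 False
```

Treating unknown keys as free-tier is one conservative design choice. A stricter service might reject unknown keys outright.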

d. Enhancing Security

Rate limiting via proxy servers can significantly enhance the security of web applications. By limiting the rate of requests, proxies can slow down or block several types of attacks, such as brute-force attempts on login systems and large-scale web scraping.

Rate limiting at the proxy level also helps against clients that route their traffic through other proxies to mask or rotate their IP addresses. Even if a client’s real IP is hidden, limits can still be enforced on other identifiers, such as session tokens or API keys.
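Choosing what to count requests against matters as much as the limit itself. The sketch below checks for an API key first, then a session token, and only falls back to the client IP. That way, rotating IP addresses does not reset the quota of a client you can already identify. The header name `X-API-Key` and the cookie name `session_id` are assumptions; substitute whatever identifiers your service actually issues.

```python
from __future__ import annotations


def rate_limit_key(headers: dict[str, str], cookies: dict[str, str], remote_addr: str) -> str:
    """Pick the identity a rate limiter should count this request against.

    Plain dicts are case-sensitive; real web frameworks usually expose
    case-insensitive header maps, which this sketch does not model.
    """
    api_key = headers.get("X-API-Key")  # assumed header name
    if api_key:
        return f"key:{api_key}"
    session = cookies.get("session_id")  # assumed cookie name
    if session:
        return f"session:{session}"
    # Last resort: the address the proxy sees, which clients can change.
    return f"ip:{remote_addr}"


print(rate_limit_key({}, {"session_id": "s1"}, "203.0.113.7"))  # session:s1
```

The `key:`, `session:`, and `ip:` prefixes keep the three kinds of identifiers from colliding when they share one counter store.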

e. Examples and Implementation Challenges

Several popular proxy servers, such as NGINX and HAProxy, offer built-in rate limiting features. These tools provide flexible and powerful ways to manage traffic, whether you’re handling web requests, API calls, or both.

NGINX: Widely used as a reverse proxy, NGINX can be configured to limit the rate of incoming requests based on parameters such as the client IP address or other request attributes. Its limit_req module, which applies the leaky bucket method, makes it straightforward to set per-key request rates with an optional burst allowance.

HAProxy: Another robust proxy server with extensive rate limiting capabilities. Administrators can track request rates in stick tables and apply limits based on criteria such as source IP, destination, and request headers. HAProxy is a popular choice for managing complex traffic patterns.

Optimize API Performance with RapidSeedbox

Managing API traffic is a breeze with RapidSeedbox proxy servers. Our proxies enable you to enforce precise rate limits, ensuring all clients have fair access to your services without risking server overload.


3. Rate Limiting Best Practices

Rate limiting can be a powerful feature, but only if it is implemented properly. Poorly designed limits can frustrate users and disrupt services. Here are some best practices to guide your implementation:

a. Analyze Traffic Patterns

Before setting rate limits, it’s crucial to understand your application’s traffic patterns. Analyzing your traffic data will help you identify peak usage times, typical request rates, and potential outliers. For example:

- Peak Traffic Analysis: Identify when traffic spikes and consider raising limits during these periods so that legitimate users are not throttled, provided your infrastructure can absorb the increased load.

- User Segmentation: Segment users based on their behavior and needs. For instance, you might allow higher limits for trusted users or premium customers.

b. Implement Adaptive Rate Limiting

Static limits can be needlessly restrictive during off-peak times and too lenient during peak loads. Adaptive rate limiting adjusts limits dynamically based on current server load, user behavior, or other real-time factors.

- Dynamic Adjustment: Use metrics such as CPU usage, response times, and error rates to adjust limits on the fly. For example, limits can be tightened temporarily when server load increases.

- Machine Learning Integration: Consider integrating machine learning models to predict traffic spikes and automatically adjust rate limits in anticipation.
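As a rough illustration of dynamic adjustment, the function below scales a base limit down when CPU utilization or p95 latency crosses a threshold. The 70% CPU threshold, the 500 ms latency target, and the 20% floor are arbitrary assumptions chosen for the example, not tuned values.

```python
def adaptive_limit(
    base_limit: int,
    cpu_utilization: float,  # fraction of CPU in use, 0.0 to 1.0
    p95_latency_ms: float,
    latency_target_ms: float = 500.0,  # assumed latency goal
    min_fraction: float = 0.2,  # never drop below 20% of the base limit (assumed floor)
) -> int:
    """Scale a per-client base limit down as the server comes under pressure."""
    # Above 70% CPU (assumed threshold), shrink linearly toward zero at 100%.
    if cpu_utilization <= 0.7:
        cpu_factor = 1.0
    else:
        cpu_factor = max(0.0, (1.0 - cpu_utilization) / 0.3)
    # If p95 latency exceeds the target, shrink in proportion to the overshoot.
    latency_factor = min(1.0, latency_target_ms / p95_latency_ms) if p95_latency_ms > 0 else 1.0
    # Use the stricter of the two signals, but keep at least the floor.
    factor = max(min_fraction, min(cpu_factor, latency_factor))
    return max(1, int(base_limit * factor))


print(adaptive_limit(100, cpu_utilization=0.5, p95_latency_ms=200))   # 100: healthy server
print(adaptive_limit(100, cpu_utilization=0.5, p95_latency_ms=1000))  # 50: latency twice the target
print(adaptive_limit(100, cpu_utilization=1.0, p95_latency_ms=200))   # 20: floor reached
```

Recomputing this value periodically and feeding it into whichever limiter you use keeps the adjustment logic separate from the counting logic.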

c. Communicate Rate Limits Clearly

One key aspect of user experience is transparency. Users should be aware of the rate limits in place, especially when interacting with APIs or services that might restrict their access. Clear communication helps prevent frustration and allows users to plan their usage accordingly.

- Documentation: Document limits in your API documentation or user guidelines. Include details on the limits, how they are enforced, and what users can do if they exceed them.

- Rate Limit Headers: Return HTTP headers that tell users their current usage and remaining quota. For example, the widely used (though not formally standardized) X-RateLimit-Limit, X-RateLimit-Remaining, and X-RateLimit-Reset headers can provide this information in real time.
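Computing these headers for a fixed-window limiter is straightforward. In this sketch, X-RateLimit-Reset is sent as a Unix timestamp marking the end of the current window. That is one common convention; some APIs send the number of seconds until reset instead, so document whichever you choose.

```python
from __future__ import annotations

import math


def rate_limit_headers(limit: int, used: int, window: float, now: float) -> dict[str, str]:
    """Build X-RateLimit-* headers for a fixed window of `window` seconds."""
    remaining = max(0, limit - used)  # never report a negative quota
    window_end = (math.floor(now / window) + 1) * window  # start of the next window
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(remaining),
        "X-RateLimit-Reset": str(int(window_end)),  # Unix timestamp in seconds
    }


print(rate_limit_headers(limit=100, used=42, window=60, now=1_700_000_030))
# {'X-RateLimit-Limit': '100', 'X-RateLimit-Remaining': '58', 'X-RateLimit-Reset': '1700000040'}
```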

d. Provide Graceful Handling of Rate Limit Exceedances

When users exceed the limits, how you handle these situations can significantly impact their experience. A well-designed system will gracefully handle these cases, providing clear feedback and options for the user.

- HTTP 429 Responses: When rejecting requests due to limits, use the HTTP 429 “Too Many Requests” status code. Include a clear message explaining why the request was denied and when the user can retry.

- Retry-After Header: Include the Retry-After header in your responses to indicate how long the user should wait before making another request. This helps users understand when to resume their activities without further rejection.

- Grace Periods: Consider implementing a grace period or a soft limit that warns users before enforcing hard limits. This can allow them to adjust their usage without immediately being cut off.
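The three practices above can be combined in one decision function. This Python sketch assumes the caller already knows how many requests the client has made in the current window and how long remains until it resets. The 429 status and the Retry-After header (given in seconds) are standard HTTP; the soft-limit warning header name is hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Decision:
    status: int
    headers: dict[str, str] = field(default_factory=dict)
    body: str = ""


def decide(used: int, soft_limit: int, hard_limit: int, seconds_until_reset: float) -> Decision:
    """Decide how to answer a request, given how many requests the client already made this window."""
    if used >= hard_limit:
        # Hard limit: reject with 429 and say when to retry (rounded up, at least 1 second).
        retry_after = max(1, math.ceil(seconds_until_reset))
        return Decision(
            status=429,
            headers={"Retry-After": str(retry_after)},
            body=f"Too many requests. Try again in {retry_after} seconds.",
        )
    headers = {}
    if used >= soft_limit:
        # Soft limit: still serve the request, but warn the client (hypothetical header name).
        remaining_after = hard_limit - used - 1
        headers["X-RateLimit-Warning"] = f"{remaining_after} requests left in this window"
    return Decision(status=200, headers=headers)


blocked = decide(used=100, soft_limit=80, hard_limit=100, seconds_until_reset=12.3)
print(blocked.status, blocked.headers)  # 429 {'Retry-After': '13'}
warned = decide(used=85, soft_limit=80, hard_limit=100, seconds_until_reset=30)
print(warned.status, warned.headers)  # 200 {'X-RateLimit-Warning': '14 requests left in this window'}
```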

e. Monitor and Adjust Rate Limits Regularly

Rate limiting is not a set-it-and-forget-it solution. Regular monitoring and adjustment are necessary to ensure the limits remain effective as traffic patterns and user behavior evolve.

- Monitoring Tools: Use monitoring tools to track the effectiveness of your rate limits. Look for metrics such as the number of rejected requests, user feedback, and overall server performance.

- Feedback Loops: Establish feedback loops where you regularly review and adjust limits based on the data collected. Consider user feedback and server performance metrics to fine-tune your approach.

- Incident Response: Be prepared to quickly adjust limits in response to incidents such as unexpected traffic spikes or DDoS attacks. Having pre-defined response plans can help mitigate these situations without causing service disruptions.
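Tracking rejected requests per route is a simple starting point for this kind of monitoring. The class below counts allowed and rejected requests and flags routes whose rejection rate exceeds a threshold. The 5% default threshold is an assumption. A real setup would usually export these counts to a metrics system rather than keep them in memory.

```python
from __future__ import annotations

from collections import Counter


class RateLimitMetrics:
    """Count allowed and rejected requests per route so limits can be reviewed."""

    def __init__(self) -> None:
        self.allowed: Counter[str] = Counter()
        self.rejected: Counter[str] = Counter()

    def record(self, route: str, allowed: bool) -> None:
        (self.allowed if allowed else self.rejected)[route] += 1

    def rejection_rate(self, route: str) -> float:
        total = self.allowed[route] + self.rejected[route]
        return self.rejected[route] / total if total else 0.0

    def routes_to_review(self, threshold: float = 0.05) -> list[str]:
        """Routes whose rejection rate exceeds `threshold` (an assumed review trigger)."""
        routes = set(self.allowed) | set(self.rejected)
        return sorted(r for r in routes if self.rejection_rate(r) > threshold)


metrics = RateLimitMetrics()
for _ in range(90):
    metrics.record("/search", True)
for _ in range(10):
    metrics.record("/search", False)
metrics.record("/login", True)
print(metrics.routes_to_review())  # ['/search']: 10% of its requests were rejected
```

A persistently high rejection rate on one route can mean either abuse or a limit set too tight for legitimate use. Telling the two apart is where user feedback and the feedback loop described above come in.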

4. Who Uses Rate Limiting and Why?

Today, many websites and APIs implement rate limiting at some level, and its use is not limited to large enterprises. Small businesses, developers, and individual users can also benefit from understanding and applying it.

Here’s a closer look at who uses rate limiting and why it is essential for them.

a. Web Service Providers

Web service providers, particularly those offering APIs, are among the primary users of rate limiting. These providers include companies that provide cloud services, software-as-a-service (SaaS) platforms, and various online tools that rely on API calls to deliver user functionality.

- Preventing Abuse: Stops users from making excessive API requests, which is particularly important for avoiding resource exhaustion.

- Ensuring Service Availability: Prevents a single user or client from monopolizing server resources, so the service remains responsive and available to everyone.

- Managing API Tiers: Allows web service providers to enforce the terms of each customer tier.

b. E-commerce Platforms

E-commerce platforms receive heavy traffic both from customers browsing products and from automated systems, such as bots, that interact with their websites. Rate limiting plays a crucial role in managing this traffic effectively.

- Mitigating Bot Traffic: E-commerce sites are frequent targets of bots that scrape data, such as pricing information, or engage in fraudulent activity. Rate limiting reduces these problems by restricting how many requests a single client can make.

- Preventing Checkout Abandonment: Keeping traffic within what the servers can handle prevents crashes and reduces the likelihood of checkout abandonment due to slow or unresponsive pages.

- Fair Access During High-Demand Events: Ensures that all customers have a fair chance of accessing the site rather than allowing a few users to dominate the traffic.

c. Social Media Platforms

Social media platforms deal with massive amounts of user-generated content and interactions. Rate limiting is crucial for managing this traffic volume and ensuring the platform remains functional for all users.

- Controlling API Usage: Manages the amount of data that third-party apps can access, preventing them from overwhelming the system with too many requests.

- Preventing Spam and Abuse: Helps prevent users from flooding the platform with excessive posts, comments, or messages.

- Managing Data Scraping: Social media platforms are often targets for data scraping, where external entities attempt to gather large amounts of user data. Rate limiting effectively curbs these efforts, protecting user privacy and the platform’s integrity.

d. Financial Institutions

Financial institutions, including banks, payment processors, and trading platforms, use rate limiting to secure their systems and ensure compliance with industry regulations.

- Enhancing Security: Limiting the number of login attempts or transaction requests helps protect systems from unauthorized access, for example by slowing down password-guessing attacks.

- Regulatory Compliance: Helps financial institutions comply with regulations by controlling data flow and preventing unauthorized data transfers.

- Ensuring System Stability: High-frequency trading platforms and online banking services experience rapid and sometimes unpredictable spikes in traffic. Rate limiting helps these institutions maintain system stability during such spikes.

e. CDNs and ISPs

CDNs and ISPs are responsible for delivering content and internet access to users. Rate limiting is a crucial tool they use to manage bandwidth and ensure consistent service quality.

- Managing Bandwidth Usage: Controls the amount of data users can transfer within a certain period, which helps manage network congestion and keeps service quality consistent.

- Preventing Network Abuse: Helps CDNs and ISPs prevent network abuse, such as denial-of-service attacks or excessive bandwidth use by a single user, which could degrade the service for others.

- Optimizing Content Delivery: Ensures that high-demand content is distributed efficiently and the network remains stable during peak usage.
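Bandwidth limiting often uses the same token bucket idea as request limiting, except that tokens represent bytes and each transfer spends as many tokens as its size. This is a simplified, hypothetical sketch of the accounting only. It does not show how a real network stack queues or shapes packets.

```python
class ByteBucket:
    """Token bucket measured in bytes: refills `bytes_per_second`, holds at most `burst_bytes`."""

    def __init__(self, bytes_per_second: float, burst_bytes: float) -> None:
        self.rate = bytes_per_second
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start full
        self.last = 0.0

    def try_send(self, size: int, now: float) -> bool:
        """Spend `size` tokens if available; otherwise the caller should delay or drop the chunk."""
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False


link = ByteBucket(bytes_per_second=1000, burst_bytes=4000)
print(link.try_send(3000, now=0), link.try_send(3000, now=0), link.try_send(3000, now=2))
# True False True: the burst allowance is spent, then refills at 1000 bytes per second
```

Note that a single chunk larger than `burst_bytes` can never pass in this sketch, so the burst size must be at least as large as the biggest unit you intend to send.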

5. Final Thoughts: Do You Need Rate Limiting?

As explored throughout this article, rate limiting is crucial in various industries. But the question remains: Do you need rate limiting for your systems?

The answer largely depends on the nature of your online services. If your application handles significant traffic, particularly API requests or user interactions, rate limiting can be invaluable in maintaining service quality and preventing abuse.

However, not every application requires aggressive limiting. For small-scale services with predictable traffic patterns, the overhead of implementing and managing limits might outweigh the benefits.

Control Your Network with RapidSeedbox

Take full control of your network traffic with RapidSeedbox’s powerful proxy servers. Our rate limiting features allow you to fine-tune access, manage bandwidth, and ensure that your services are accessible to all users without interruption.
